TokenCounter

Browser-based token counting and cost estimation tool for multiple popular large language models (LLMs).


Product Overview

What is TokenCounter?

TokenCounter is a privacy-focused tool for accurately counting tokens and estimating usage costs across widely used LLMs such as GPT-4, Claude-3, and Llama-3. It runs entirely client-side in the browser, using efficient tokenizers from the Transformers.js library, so prompt text never leaves the user's device. Developers, researchers, and other AI users can use it to optimize prompt length, manage budgets, and avoid token-limit errors without compromising data privacy.

Key Features

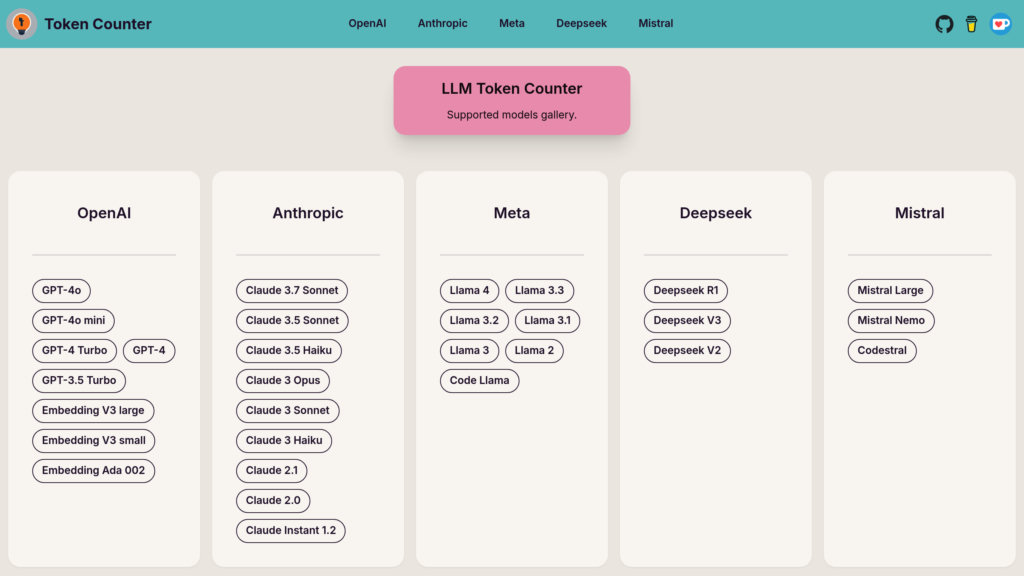

Multi-Model Token Counting

Supports tokenization for models from OpenAI, Anthropic, Meta, and other providers, with counts based on each model's own tokenizer.
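Token counts differ between models because each model uses its own tokenizer vocabulary. Many LLM tokenizers, GPT-4's among them, are based on byte-pair encoding (BPE), which repeatedly merges the highest-priority adjacent symbol pair from a learned merge list. The toy sketch below illustrates that idea only; it is not TokenCounter's or Transformers.js's implementation, and `MERGES_A` and `MERGES_B` are hypothetical stand-ins for two models' tokenizers. Real tokenizers operate on bytes, apply pre-tokenization rules, and have far larger vocabularies.

```python
from __future__ import annotations


def bpe_encode(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Apply BPE merges to one word, earliest-learned (lowest-rank) pair first."""
    ranks = {pair: rank for rank, pair in enumerate(merges)}
    symbols = list(word)  # start from individual characters
    while len(symbols) > 1:
        # Rank every adjacent pair; pairs missing from the merge table rank as infinity.
        candidates = [
            (ranks.get((left, right), float("inf")), i)
            for i, (left, right) in enumerate(zip(symbols, symbols[1:]))
        ]
        best_rank, i = min(candidates)  # lowest rank wins, leftmost on ties
        if best_rank == float("inf"):
            break  # no learned merge applies to any remaining pair
        symbols[i : i + 2] = [symbols[i] + symbols[i + 1]]
    return symbols


def count_tokens(text: str, merges: list[tuple[str, str]]) -> int:
    """Count tokens by splitting on whitespace and BPE-encoding each word."""
    return sum(len(bpe_encode(word, merges)) for word in text.split())


# Hypothetical merge tables standing in for two different models' tokenizers.
MERGES_A = [("l", "o"), ("lo", "w"), ("e", "r")]
MERGES_B = [("e", "r"), ("w", "er")]
```

Under these hypothetical tables, `count_tokens("lower lower", MERGES_A)` returns 4 while the same call with `MERGES_B` returns 6: the same text produces a different token count, in miniature, just as one prompt can cost a different number of tokens on different models.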

Client-Side Privacy

Performs all token counting locally in the browser, ensuring that user prompts remain confidential and are not transmitted to any server.

Real-Time Token and Cost Estimation

Instantly displays token counts and estimates input costs as users type or paste text, enabling efficient prompt optimization.
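Input-cost estimation is per-token arithmetic: estimated cost = token count × price per token. A minimal sketch, assuming prices are quoted in USD per million input tokens (a common convention, though providers differ); the model names and prices below are hypothetical placeholders, not TokenCounter's price table.

```python
# Hypothetical per-million-token input prices in USD; real prices vary by
# provider and change over time, so these values are placeholders only.
HYPOTHETICAL_INPUT_PRICES_PER_MTOK = {
    "model-a": 2.50,
    "model-b": 3.00,
}


def estimate_input_cost(token_count: int, usd_per_million_tokens: float) -> float:
    """Return the estimated input cost in USD for `token_count` tokens."""
    if token_count < 0:
        raise ValueError("token_count must be non-negative")
    return token_count * usd_per_million_tokens / 1_000_000


if __name__ == "__main__":
    tokens = 1_200  # e.g. a count reported by a token counter
    for model, price in HYPOTHETICAL_INPUT_PRICES_PER_MTOK.items():
        print(f"{model}: ${estimate_input_cost(tokens, price):.6f}")
```

At the hypothetical $2.50 per million rate, a 1,200-token prompt works out to an estimated $0.003. Output tokens are usually billed separately, often at a different rate, and are not included in this input-only estimate.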

Browser-Based and Easy to Use

No installation required; runs purely in-browser with a user-friendly interface suitable for both beginners and experts.

Continuous Model Support Expansion

Regularly updated to include more LLMs and improve token counting accuracy, reflecting the evolving AI landscape.

Use Cases

- Prompt Optimization: Helps AI developers and users tailor prompts to fit within token limits to avoid errors and reduce unnecessary costs.

- Cost Management: Enables budgeting and cost estimation for API usage by calculating tokens and estimating expenses before sending requests.

- Research and Development: Supports AI researchers in analyzing token usage patterns across different models for experimental and comparative studies.

- Educational Tool: Assists learners and AI enthusiasts in understanding tokenization and model-specific token limits through hands-on interaction.
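For prompt optimization, checking whether a prompt fits is simple arithmetic once its token count is known: the prompt plus the tokens reserved for the model's reply should not exceed the context window. A minimal sketch, assuming the prompt's token count comes from a tool such as TokenCounter; `tokens_over_budget` and the 8,192-token window used in the example are hypothetical illustration values, not figures taken from TokenCounter.

```python
def tokens_over_budget(
    prompt_tokens: int,
    context_window: int,
    reserved_output_tokens: int = 0,
) -> int:
    """Return how many tokens must be cut from the prompt (0 if it already fits).

    The budget is the context window minus the tokens reserved for the reply.
    """
    budget = context_window - reserved_output_tokens
    if budget < 0:
        raise ValueError("reserved_output_tokens exceeds the context window")
    return max(0, prompt_tokens - budget)
```

For example, a 9,000-token prompt against a hypothetical 8,192-token window with 1,000 tokens reserved for the reply is 1,808 tokens over budget, while a 5,000-token prompt fits with room to spare.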


TokenCounter Alternatives

FuriosaAI

High-performance, power-efficient AI accelerators designed for scalable inference in data centers, optimized for large language models and multimodal workloads.

Predibase

AI platform specializing in fine-tuning and deploying open-source small language models, with an emphasis on speed and cost efficiency.

Cerebrium

Serverless AI infrastructure platform enabling fast, scalable deployment and management of AI models with optimized performance and cost efficiency.

Not Diamond

AI meta-model router that intelligently selects the optimal large language model (LLM) for each query to maximize quality, reduce cost, and minimize latency.

Inferless

Serverless GPU platform enabling fast, scalable, and cost-efficient deployment of custom machine learning models with automatic autoscaling and low latency.

Unify AI

A platform that streamlines access, comparison, and optimization of large language models through a unified API and dynamic routing.

Cirrascale Cloud Services

High-performance cloud platform delivering scalable GPU-accelerated computing and storage optimized for AI, HPC, and generative workloads.

TrainLoop AI

A managed platform for fine-tuning reasoning models using reinforcement learning to deliver domain-specific, reliable AI performance.

TokenCounter Website Analytics

🇸🇬 SG: 17.76%

🇺🇸 US: 11.65%

🇮🇳 IN: 9.68%

🇻🇳 VN: 8.28%

🇰🇭 KH: 6.9%

Others: 45.73%