Inferless

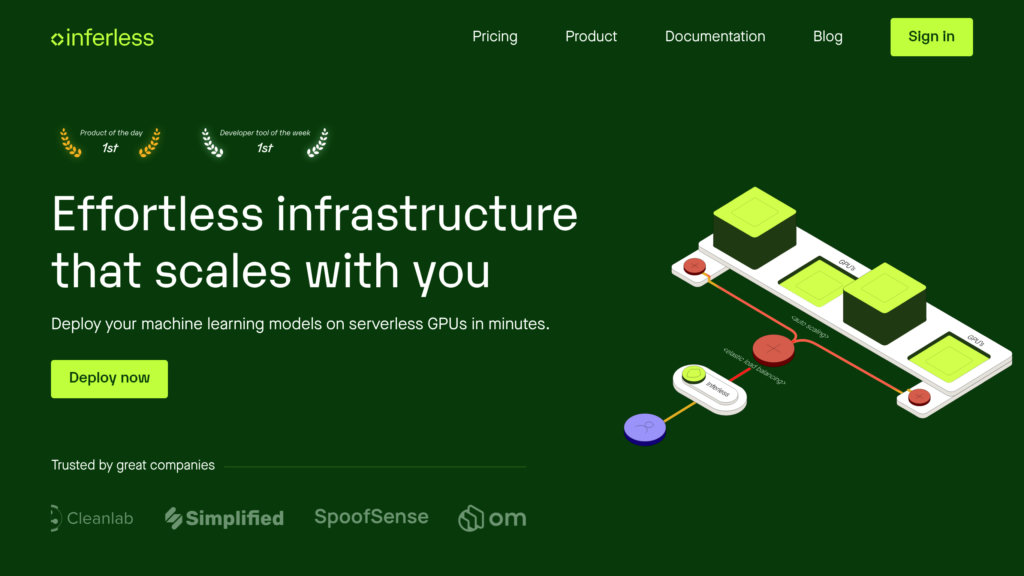

Serverless GPU platform for fast, scalable, and cost-efficient deployment of custom machine learning models, with automatic scaling and low-latency inference.


Product Overview

What is Inferless?

Inferless is a serverless GPU inference platform designed to simplify the deployment of machine learning models. Developers can deploy models from Hugging Face, Git repositories, or Docker images with minimal configuration, and deployments scale on demand from zero to hundreds of GPUs. An infrastructure-aware load balancer and dynamic batching keep GPU utilization high, cold-start latency is reduced to a matter of seconds, and automated CI/CD pipelines handle rebuilds and redeployments. Secure, isolated environments and customizable runtimes support diverse AI workloads, including LLM chatbots, computer vision, and audio generation, which makes the platform well suited to production-grade ML inference at scale.

Key Features

Serverless GPU Autoscaling

Automatically scales GPU resources up or down based on real-time demand, ensuring cost efficiency and consistent performance even with spiky workloads.
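To make the idea of demand-based scaling concrete, here is a minimal sketch of a generic target-concurrency policy. It is not Inferless's actual autoscaling algorithm; the function name `desired_replicas` and its parameters are hypothetical. The policy provisions just enough replicas that none handles more than a target number of in-flight requests, clamps the result to bounds, and returns zero when idle (scale to zero).

```python
import math


def desired_replicas(in_flight: int, target_per_replica: int,
                     min_replicas: int = 0, max_replicas: int = 100) -> int:
    """Return how many GPU replicas to run for the current load.

    Hypothetical target-concurrency policy (not Inferless's implementation):
    each replica should serve at most `target_per_replica` in-flight requests.
    With min_replicas=0, an idle deployment scales down to zero replicas.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    if in_flight < 0:
        raise ValueError("in_flight cannot be negative")
    # Round up so a partial replica's worth of load still gets a replica.
    needed = math.ceil(in_flight / target_per_replica)
    # Clamp to the configured floor and ceiling.
    return max(min_replicas, min(max_replicas, needed))
```

For example, 10 in-flight requests with a target of 4 per replica yields 3 replicas, while a spike of 1,000 requests is capped at `max_replicas`. Real autoscalers usually add a cooldown window so that brief dips in traffic do not tear down warm replicas.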

Dynamic Batching

Combines multiple inference requests into a single batch on the server side to increase GPU throughput and reduce latency.
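The following is a minimal sketch of how server-side dynamic batching generally works, written with only the Python standard library. It is not Inferless's code; the class `DynamicBatcher` and its parameters `max_batch_size` and `max_wait_s` are hypothetical. A worker thread waits for the first request, keeps collecting requests until the batch is full or a short wait window closes, then runs the model once on the whole batch and hands each caller its own result.

```python
import queue
import threading
import time
from concurrent.futures import Future
from typing import Any, Callable, List, Tuple


class DynamicBatcher:
    """Hypothetical dynamic batcher: groups queued requests into one model call."""

    def __init__(self, model_fn: Callable[[List[Any]], List[Any]],
                 max_batch_size: int = 8, max_wait_s: float = 0.01):
        self.model_fn = model_fn
        self.max_batch_size = max_batch_size
        self.max_wait_s = max_wait_s
        self._queue: "queue.Queue[Tuple[Any, Future]]" = queue.Queue()
        self._worker = threading.Thread(target=self._loop, daemon=True)
        self._worker.start()

    def submit(self, item: Any) -> Future:
        """Enqueue one request; the returned Future resolves to its output."""
        fut: Future = Future()
        self._queue.put((item, fut))
        return fut

    def _loop(self) -> None:
        while True:
            # Block until at least one request arrives.
            batch = [self._queue.get()]
            deadline = time.monotonic() + self.max_wait_s
            # Keep collecting until the batch is full or the wait window closes.
            while len(batch) < self.max_batch_size:
                remaining = deadline - time.monotonic()
                if remaining <= 0:
                    break
                try:
                    batch.append(self._queue.get(timeout=remaining))
                except queue.Empty:
                    break
            inputs = [item for item, _ in batch]
            try:
                # One model call (e.g. one GPU forward pass) for the whole batch.
                outputs = list(self.model_fn(inputs))
                if len(outputs) != len(batch):
                    raise ValueError("model_fn must return one output per input")
            except Exception as exc:
                # Propagate the failure to every caller in this batch.
                for _, fut in batch:
                    fut.set_exception(exc)
                continue
            for (_, fut), out in zip(batch, outputs):
                fut.set_result(out)
```

The two knobs trade latency for throughput: a larger `max_batch_size` amortizes each GPU call over more requests, while `max_wait_s` bounds how long the first request in a batch can be held back waiting for company.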

Custom Runtime Support

Allows users to define container environments with specific software dependencies tailored to their model requirements.

Automated CI/CD Integration

Enables automatic model rebuilds and deployments, eliminating manual intervention and accelerating development cycles.

NFS-like Writable Volumes

Supports simultaneous connections from multiple replicas, so data can be shared and stored efficiently across a deployment.
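When several replicas share one writable volume, a replica reading a file while another is still writing it can see a truncated result. A common pattern, sketched below under stated assumptions, is to write to a temporary file in the same directory and then rename it over the target with `os.replace`, which is atomic on POSIX filesystems when both paths are on the same filesystem. This is a general technique, not an Inferless API; the function name `atomic_write_bytes` and the mount path in the usage note are hypothetical, and rename guarantees on a network filesystem should be checked against the volume's documentation.

```python
import os
import tempfile


def atomic_write_bytes(path: str, data: bytes) -> None:
    """Write `data` to `path` so concurrent readers never see a partial file.

    Hypothetical helper: writes a temp file next to the target, fsyncs it,
    then atomically renames it into place with os.replace.
    """
    directory = os.path.dirname(os.path.abspath(path))
    # The temp file must live in the same directory (same filesystem) for the
    # rename to be atomic.
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".tmp-")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
            f.flush()
            os.fsync(f.fileno())  # make sure bytes reach storage before rename
        os.replace(tmp_path, path)
    except BaseException:
        # Remove the temp file if anything failed before the rename completed.
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise
```

A replica could call `atomic_write_bytes("/mnt/shared-volume/cache/result.bin", payload)` (a hypothetical path), and other replicas reading that path will see either the old file or the complete new one, never a half-written mix.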

Comprehensive Monitoring and Logging

Provides detailed call and build logs along with performance metrics; inference logs and build logs are kept separate for easier debugging and refinement.
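The separation of build logs from inference logs, plus per-call performance metrics, can be mirrored inside model code with Python's standard `logging` module. The sketch below is a generic pattern, not how Inferless collects logs; the logger names `model.build` and `model.inference` and the helper `timed_call` are hypothetical. Each inference call is timed and its latency is written to the inference logger, while setup messages go to the build logger.

```python
import logging
import time
from typing import Any, Callable

# Separate named loggers keep build-time and request-time output apart
# (hypothetical names; not an Inferless API).
build_log = logging.getLogger("model.build")
inference_log = logging.getLogger("model.inference")
build_log.setLevel(logging.INFO)
inference_log.setLevel(logging.INFO)


def timed_call(fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
    """Run one inference call and log its latency in milliseconds."""
    start = time.perf_counter()
    try:
        return fn(*args, **kwargs)
    finally:
        # Logged even if the call raises, so failed requests still show timing.
        elapsed_ms = (time.perf_counter() - start) * 1000
        inference_log.info("call=%s latency_ms=%.2f",
                           getattr(fn, "__name__", "call"), elapsed_ms)
```

Attach a handler (for example via `logging.basicConfig(level=logging.INFO)`) to see the output; filtering on the logger name then separates the two streams.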

Use Cases

- Large Language Model (LLM) Chatbots: Deploy scalable, responsive chatbots powered by advanced language models with minimal latency.

- AI Agents and Automation: Run AI-driven agents that need dynamic scaling to handle unpredictable workloads efficiently.

- Computer Vision Applications: Deploy image and video analysis models with optimized GPU inference for real-time processing.

- Audio Generation and Processing: Support audio synthesis and processing models with GPU resources that scale to meet demand.

- Batch Processing Workloads: Handle large-scale batch inference tasks efficiently with dynamic resource allocation.


Inferless Alternatives

Not Diamond

AI meta-model router that intelligently selects the optimal large language model (LLM) for each query to maximize quality, reduce cost, and minimize latency.

Cerebrium

Serverless AI infrastructure platform enabling fast, scalable deployment and management of AI models with optimized performance and cost efficiency.

Predibase

AI platform specializing in fine-tuning and deploying open-source small language models, with a focus on speed and cost efficiency.

FuriosaAI

High-performance, power-efficient AI accelerators designed for scalable inference in data centers, optimized for large language models and multimodal workloads.

Unify AI

A platform that streamlines access, comparison, and optimization of large language models through a unified API and dynamic routing.

TokenCounter

Browser-based token counting and cost estimation tool for multiple popular large language models (LLMs).

Cirrascale Cloud Services

High-performance cloud platform delivering scalable GPU-accelerated computing and storage optimized for AI, HPC, and generative workloads.

TrainLoop AI

A managed platform for fine-tuning reasoning models using reinforcement learning to deliver domain-specific, reliable AI performance.

Inferless Website Traffic by Country

🇺🇸 US: 22.86%

🇮🇳 IN: 9.47%

🇬🇧 GB: 9.18%

🇩🇪 DE: 8.81%

🇻🇳 VN: 7.36%

Others: 42.32%