Cerebrium

Serverless AI infrastructure platform enabling fast, scalable deployment and management of AI models with optimized performance and cost efficiency.

Product Overview

What is Cerebrium?

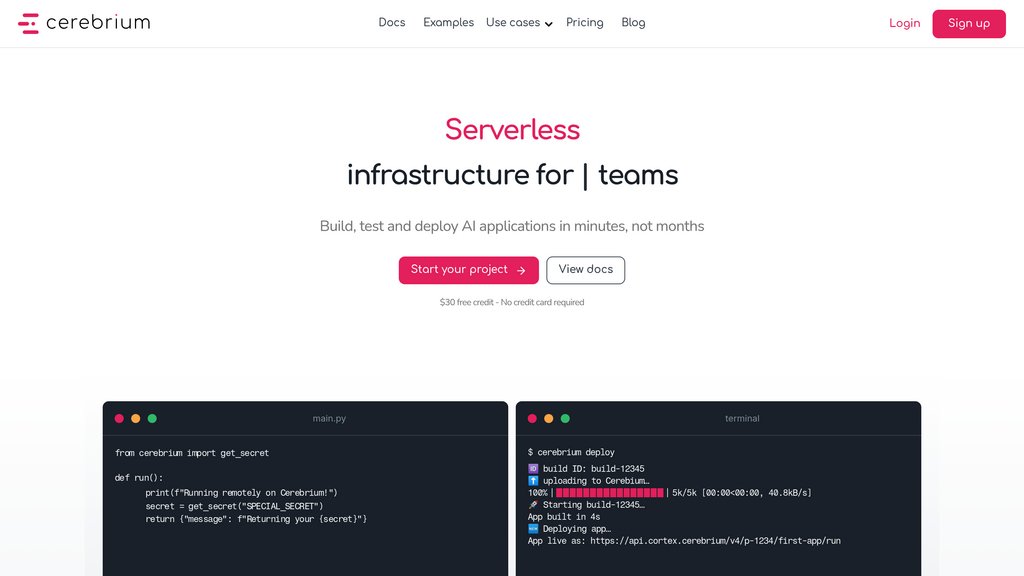

Cerebrium offers serverless infrastructure designed to simplify building, deploying, and scaling AI applications. It supports a wide range of GPU and CPU options, so users can run large-scale batch jobs, real-time voice applications, and complex image and video processing with low latency. The platform emphasizes rapid deployment, efficient autoscaling, and robust observability, helping applications stay performant and reliable under varying workloads. With enterprise-grade security compliance and real-time logging, Cerebrium targets teams that want to move AI projects from prototype to production quickly.

Key Features

Serverless Autoscaling

Automatically scales AI workloads to absorb traffic spikes and keep services running without manual intervention.

Wide GPU Selection

Access to over a dozen GPU types, including NVIDIA H100, A100, and L40S, so each AI workload can be matched to the right balance of cost and performance.

Low Latency & Fast Cold Starts

Aims for near-instant inference readiness, with cold starts measured in seconds and minimal added latency per request.

Comprehensive Observability

Provides real-time logging, health metrics, and cost tracking to monitor deployments and optimize resource usage.

Enterprise Security

SOC 2 and HIPAA compliant infrastructure designed for data privacy, security, and high availability.

Rapid Deployment

Deploy models from development to production in minutes using intuitive interfaces and pre-configured templates.

Use Cases

- Large Language Model Deployment: Run and scale LLMs efficiently with features like dynamic request batching and streaming outputs for real-time responsiveness.

- Voice Applications: Support voice-to-voice AI agents for customer support, sales, and content creation with ultra-low latency and high concurrency.

- Image and Video Processing: Use powerful GPUs and distributed caching for tasks such as digital twin creation, asset generation, and video analysis.

- Content Generation and Summarization: Use AI to generate, translate, and summarize text, audio, and video content across multiple languages and formats.

- Real-Time AI Services: Deliver interactive AI-powered applications with minimal delay, keeping the user experience smooth at scale.

Cerebrium Alternatives

Predibase

Next-generation AI platform specializing in fine-tuning and deploying open-source small language models with high speed and cost efficiency.

Not Diamond

AI meta-model router that intelligently selects the optimal large language model (LLM) for each query to maximize quality, reduce cost, and minimize latency.

FuriosaAI

High-performance, power-efficient AI accelerators designed for scalable inference in data centers, optimized for large language models and multimodal workloads.

Inferless

Serverless GPU platform enabling fast, scalable, and cost-efficient deployment of custom machine learning models with automatic scaling and low latency.

TokenCounter

Browser-based token counting and cost estimation tool for multiple popular large language models (LLMs).

Unify AI

A platform that streamlines access, comparison, and optimization of large language models through a unified API and dynamic routing.

Cirrascale Cloud Services

High-performance cloud platform delivering scalable GPU-accelerated computing and storage optimized for AI, HPC, and generative workloads.

TrainLoop AI

A managed platform for fine-tuning reasoning models using reinforcement learning to deliver domain-specific, reliable AI performance.

Cerebrium Website Traffic by Country

🇮🇳 IN: 28.35%

🇺🇸 US: 23.83%

🇳🇵 NP: 6.87%

🇩🇪 DE: 5.65%

🇻🇳 VN: 4.76%

Others: 30.53%