Laminar

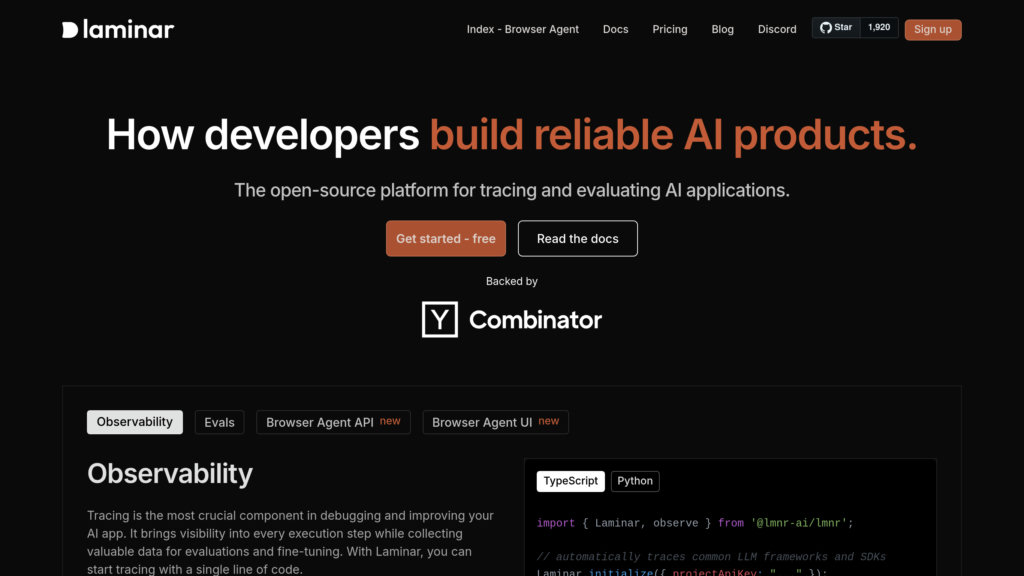

Open-source platform for tracing, evaluating, and analyzing AI applications with seamless LLM observability and tooling.


Product Overview

What is Laminar?

Laminar is a comprehensive open-source platform that helps developers build reliable AI products by providing deep observability and evaluation tools for Large Language Model (LLM) applications. It automatically traces AI frameworks and SDKs with minimal code, collects detailed execution data, and supports scalable evaluation and labeling workflows. Its high-performance Rust backend is designed for low latency and efficient processing, and its UI offers trace visualization, dataset management, and advanced analytics. Laminar can be self-hosted or used as a managed cloud service.

Key Features

Automatic LLM Tracing

Instrument popular LLM SDKs and frameworks like OpenAI, Anthropic, LangChain, and more with just two lines of code to capture detailed execution traces.
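The idea behind automatic tracing can be sketched in plain Python: wrap each call, record a span with its inputs, output, and timing, and link it to whichever span was active when it started. This is a standard-library illustration under stated assumptions, not Laminar's SDK; the `traced` decorator, the `Span` fields, and the in-memory `SPANS` list are hypothetical names chosen for the example.

```python
import functools
import time
import uuid
from contextvars import ContextVar
from dataclasses import dataclass, field
from typing import Any, Callable, List, Optional


@dataclass
class Span:
    name: str
    span_id: str
    parent_id: Optional[str]
    start: float
    end: float = 0.0
    inputs: dict = field(default_factory=dict)
    output: Any = None


# Finished spans, kept in memory here (a real tracer would export them).
SPANS: List[Span] = []
# The span currently executing in this context; used to link children to parents.
_current: ContextVar = ContextVar("current_span", default=None)


def traced(fn: Callable) -> Callable:
    """Hypothetical decorator: record one span per call, nested under the active span."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        parent = _current.get()
        span = Span(
            name=fn.__name__,
            span_id=uuid.uuid4().hex,
            parent_id=parent.span_id if parent else None,
            start=time.perf_counter(),
            inputs={"args": args, "kwargs": kwargs},
        )
        token = _current.set(span)  # make this span the parent of nested calls
        try:
            span.output = fn(*args, **kwargs)
            return span.output
        finally:
            span.end = time.perf_counter()
            _current.reset(token)  # restore the previous active span
            SPANS.append(span)
    return wrapper


@traced
def call_llm(prompt: str) -> str:
    # Stand-in for a real LLM SDK request.
    return prompt.upper()


@traced
def answer(question: str) -> str:
    return call_llm(f"Answer briefly: {question}")
```

Calling `answer("what is tracing?")` records two spans, with `call_llm` stored as a child of `answer`. A `ContextVar` is used instead of a global stack so that nesting stays correct across threads and asyncio tasks.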

Real-Time Observability

Collect and analyze trace data in real time with minimal performance overhead using a Rust-based backend and gRPC communication.
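A common way to keep instrumentation overhead low is to make the application's hot path do nothing but enqueue a span, while a background worker ships spans in batches. The sketch below shows that general pattern with the standard library only; it does not reproduce Laminar's gRPC transport or Rust backend, and `BatchExporter` and its `send` callback are hypothetical stand-ins for the network call.

```python
import queue
import threading
from typing import Callable


class BatchExporter:
    """Hypothetical exporter: callers only enqueue; a worker thread sends batches."""

    def __init__(self, send: Callable[[list], None], max_batch: int = 100,
                 flush_interval: float = 0.5):
        self._queue: queue.Queue = queue.Queue()
        self._send = send
        self._max_batch = max_batch
        self._interval = flush_interval
        self._stop = threading.Event()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def export(self, span: dict) -> None:
        # Non-blocking for the instrumented application (the queue is unbounded).
        self._queue.put_nowait(span)

    def _drain(self) -> list:
        # Pull up to max_batch spans without waiting.
        batch = []
        while len(batch) < self._max_batch:
            try:
                batch.append(self._queue.get_nowait())
            except queue.Empty:
                break
        return batch

    def _run(self) -> None:
        # Wake every flush_interval (or immediately on shutdown) and send everything queued.
        while not self._stop.is_set():
            self._stop.wait(self._interval)
            while batch := self._drain():
                self._send(batch)

    def shutdown(self) -> None:
        self._stop.set()
        self._worker.join()
        # Flush anything enqueued after the worker's last cycle.
        while batch := self._drain():
            self._send(batch)
```

Because a single consumer drains a FIFO queue, spans arrive at `send` in the order they were exported, and the calling code never waits on the network.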

Evaluation and Labeling Automation

Run scalable automated evaluations and label spans to generate datasets for fine-tuning, prompt engineering, and quality tracking.
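An automated evaluation boils down to running the application (the executor) over a dataset and scoring each output with one or more evaluator functions. The following is a minimal, hypothetical sketch of that loop; the `run_evaluation` name, the `data`/`target` datapoint keys, and the evaluator signature are assumptions for illustration, not Laminar's API.

```python
from statistics import mean
from typing import Callable, Dict, List

# Hypothetical shapes: a datapoint is {"data": {...}, "target": {...}}.
Executor = Callable[[dict], str]
Evaluator = Callable[[str, dict], float]


def run_evaluation(data: List[dict], executor: Executor,
                   evaluators: Dict[str, Evaluator]) -> Dict[str, float]:
    """Run the executor on every datapoint and average each evaluator's scores."""
    scores: Dict[str, List[float]] = {name: [] for name in evaluators}
    for point in data:
        output = executor(point["data"])
        for name, evaluate in evaluators.items():
            scores[name].append(evaluate(output, point["target"]))
    return {name: mean(vals) for name, vals in scores.items()}


# Example: a stubbed "model" that gets one of two answers right.
dataset = [
    {"data": {"country": "France"}, "target": {"capital": "Paris"}},
    {"data": {"country": "Japan"}, "target": {"capital": "Tokyo"}},
]


def fake_llm(data: dict) -> str:
    return {"France": "Paris", "Japan": "Kyoto"}[data["country"]]


def exact_match(output: str, target: dict) -> float:
    return 1.0 if output == target["capital"] else 0.0


summary = run_evaluation(dataset, fake_llm, {"exact_match": exact_match})
# summary == {"exact_match": 0.5}
```

Keeping evaluators as plain functions makes it easy to add further scores (for example an LLM-as-judge check) without changing the loop.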

Comprehensive Trace Analytics

Visualize, search, and group traces and sessions via a powerful UI to gain insights into AI app behavior and performance.
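Session-level analytics rest on a simple grouping step: traces that share a session ID are rolled up into per-session counts, total duration, and error totals. A small sketch, assuming a hypothetical `TraceSummary` record; it does not reflect how Laminar stores or queries traces.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TraceSummary:
    trace_id: str
    session_id: str
    duration_ms: float
    status: str  # "ok" or "error"


def group_by_session(traces: List[TraceSummary]) -> Dict[str, dict]:
    """Roll traces up into per-session statistics."""
    groups: Dict[str, List[TraceSummary]] = defaultdict(list)
    for t in traces:
        groups[t.session_id].append(t)
    return {
        sid: {
            "traces": len(ts),
            "total_ms": sum(t.duration_ms for t in ts),
            "errors": sum(t.status == "error" for t in ts),
        }
        for sid, ts in groups.items()
    }
```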

Open-Source and Self-Hosting Friendly

Fully open-source with easy self-hosting options using Docker Compose, enabling customization and control over your AI observability stack.

Browser Agent Observability

A distinctive feature that records browser sessions synchronized with agent traces, making it easier to debug browser agents and analyze user experience.
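Synchronizing a browser recording with agent traces amounts to aligning two timelines: each recorded browser event is attached to the innermost span whose time window contains it. The sketch below assumes both timelines share a clock and uses hypothetical `SpanWindow` and `BrowserEvent` types; it is not taken from Laminar's implementation.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SpanWindow:
    span_id: str
    start: float  # seconds on a clock shared with the browser recording
    end: float
    depth: int    # nesting level; deeper means more specific


@dataclass
class BrowserEvent:
    timestamp: float
    kind: str     # e.g. "click", "navigate"


def attach_events(spans: List[SpanWindow],
                  events: List[BrowserEvent]) -> Dict[str, List[str]]:
    """Map each span_id to the events that occurred while it was the innermost active span."""
    attached: Dict[str, List[str]] = {s.span_id: [] for s in spans}
    for ev in events:
        active = [s for s in spans if s.start <= ev.timestamp <= s.end]
        if active:  # events outside every span are dropped
            innermost = max(active, key=lambda s: s.depth)
            attached[innermost.span_id].append(ev.kind)
    return attached
```

The linear scan keeps the sketch short; for long recordings, sorting spans by start time and using an interval structure would scale better.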

Use Cases

- AI Application Debugging: Developers can trace and debug LLM-based features efficiently by visualizing execution flows and identifying bottlenecks.

- Performance Monitoring: Operations teams monitor latency, cost, token usage, and other metrics to optimize AI model deployments.

- Automated Model Evaluation: Data scientists automate evaluation workflows to track AI model accuracy and improve prompt engineering.

- Dataset Creation for Fine-Tuning: Generate labeled datasets from production traces to support continuous model improvement and training.

- User Interaction Analysis: Analyze user-agent interactions in browser environments to enhance AI-driven user experiences.
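Latency and cost monitoring usually reduce to two calculations over span data: a percentile over durations and a price applied to token counts. The prices below are made-up placeholders for a hypothetical `model-a`, and `span_cost` and `percentile` are illustrative helpers, not part of Laminar.

```python
import math
from typing import List

# Hypothetical USD prices per 1M tokens; substitute your provider's real rates.
PRICES_PER_MTOK = {"model-a": {"input": 3.0, "output": 15.0}}


def span_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of one LLM call from its token usage."""
    p = PRICES_PER_MTOK[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


def percentile(values: List[float], q: float) -> float:
    """Nearest-rank percentile for q in (0, 100]; always returns an observed value."""
    if not values:
        raise ValueError("percentile of empty data")
    ordered = sorted(values)
    rank = math.ceil(q * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]
```

The nearest-rank method is used because it reports a latency that actually occurred; `statistics.quantiles` interpolates between observations instead.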


Laminar Alternatives

Langtrace

Open-source observability platform designed to monitor, evaluate, and optimize large language model (LLM) applications with real-time insights and detailed tracing.

EdgeBit

Comprehensive software supply chain security platform that continuously monitors running code and automates vulnerability prioritization.

Treblle

API intelligence platform providing real-time monitoring, analytics, security, and documentation to streamline the entire API lifecycle.

OpenMeter

Real-time usage metering and flexible billing platform designed for AI, DevTool, and SaaS companies to enable scalable usage-based pricing and revenue maximization.

Releem

Automated MySQL performance monitoring and tuning tool that simplifies database management with real-time insights and actionable optimization recommendations.

Keywords AI

Full-stack LLM engineering platform enabling developers and PMs to build, monitor, and optimize AI products rapidly with advanced observability and prompt management.

Hoop.dev

Secure access gateway for databases and servers that simplifies infrastructure access with automated security and data masking.

OpenReplay

OpenReplay is an open-source session replay and analytics platform designed for developers and product teams, offering full data control through self-hosting and advanced user behavior insights.

Laminar Website Traffic by Country

🇺🇸 US: 67%

🇮🇳 IN: 14.48%

🇬🇧 GB: 6.96%

🇲🇽 MX: 3.67%

🇰🇷 KR: 3.03%

Others: 4.85%