Langtrace

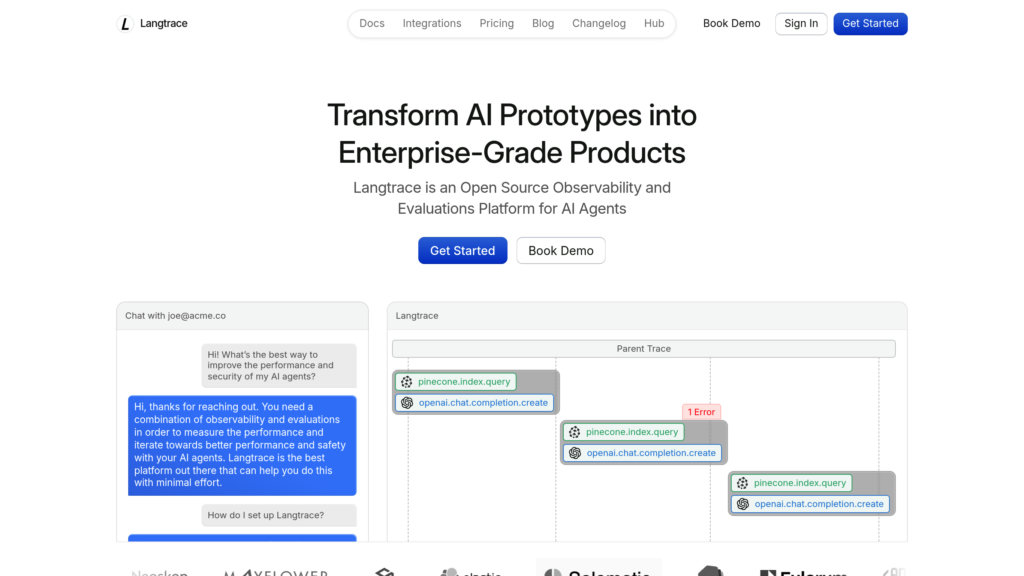

Open-source observability platform designed to monitor, evaluate, and optimize large language model (LLM) applications with real-time insights and detailed tracing.


Product Overview

What is Langtrace?

Langtrace is a developer-centric, open-source observability tool for improving the reliability and accuracy of AI-powered products, particularly those built on large language models. It follows OpenTelemetry standards to trace and monitor API calls, latency, token usage, costs, and response accuracy. Langtrace integrates with popular LLMs, frameworks, and vector databases, and provides real-time dashboards and evaluation tools for debugging and improving AI workflows. SDKs are available for Python and TypeScript, and the platform can be used as a managed cloud service or self-hosted. It is aimed at developers moving AI systems from prototype demos to accurate, reliable, production-ready applications.

Key Features

OpenTelemetry-Based Tracing

Uses OpenTelemetry standards to produce detailed traces across LLMs, frameworks, and vector databases for deep observability.
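To illustrate what a trace contains, here is a minimal, stdlib-only sketch of OpenTelemetry-style spans: each timed operation records a trace ID, its own span ID, its parent's span ID, and key/value attributes. `ToyTracer` is a hypothetical in-memory stand-in, not the OpenTelemetry SDK or the Langtrace SDK. The `gen_ai.*` attribute keys are modeled on OpenTelemetry's GenAI semantic conventions; whether Langtrace emits exactly these keys is an assumption, and the model name, `top_k` attribute, and token counts are placeholders.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Optional


@dataclass
class Span:
    """Simplified, OpenTelemetry-style span: one timed operation within a trace."""
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    start: float
    end: float = 0.0
    attributes: Dict[str, object] = field(default_factory=dict)


class ToyTracer:
    """Hypothetical in-memory tracer; not the OpenTelemetry or Langtrace API."""

    def __init__(self) -> None:
        self.finished: List[Span] = []
        self._stack: List[Span] = []  # currently open spans, innermost last

    @contextmanager
    def span(self, name: str, **attributes: object) -> Iterator[Span]:
        parent = self._stack[-1] if self._stack else None
        current = Span(
            name=name,
            # Children share the parent's trace ID; a root span starts a new trace.
            trace_id=parent.trace_id if parent else uuid.uuid4().hex,
            span_id=uuid.uuid4().hex[:16],
            parent_id=parent.span_id if parent else None,
            start=time.perf_counter(),
            attributes=dict(attributes),
        )
        self._stack.append(current)
        try:
            yield current
        finally:
            # Close the span even if the traced operation raised.
            current.end = time.perf_counter()
            self._stack.pop()
            self.finished.append(current)


# Usage: a RAG-style request with a retrieval step and an LLM call.
tracer = ToyTracer()
with tracer.span("rag_query"):
    with tracer.span("vector_search", top_k=5):
        pass  # placeholder for a vector database lookup
    with tracer.span("llm_call", **{"gen_ai.request.model": "example-model"}) as llm:
        # Placeholder counts; a real integration reads them from the provider response.
        llm.attributes["gen_ai.usage.input_tokens"] = 120
        llm.attributes["gen_ai.usage.output_tokens"] = 45
```

Child spans finish before their parent, so `tracer.finished` lists `vector_search`, `llm_call`, then `rag_query`, all sharing one trace ID. Nesting via a stack keeps the example short; real tracers propagate context per thread or task instead.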

Real-Time Monitoring and Analytics

Tracks key metrics including token usage, costs, latency, and accuracy with dynamic dashboards to optimize AI application performance.
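To make these metrics concrete, the sketch below rolls per-call token counts and latencies up into dashboard-style totals: token count, cost, mean latency, and p95 latency. The model name and per-million-token prices are hypothetical placeholders (real prices vary by provider and change over time), and the p95 uses the simple nearest-rank method. This is an illustration of the arithmetic, not Langtrace's own computation.

```python
import math
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List

# Hypothetical prices in USD per 1M tokens; substitute your provider's real rates.
PRICES_PER_1M: Dict[str, Dict[str, float]] = {
    "model-a": {"input": 0.50, "output": 1.50},
}


@dataclass
class LLMCall:
    model: str
    input_tokens: int
    output_tokens: int
    latency_ms: float


def call_cost(call: LLMCall) -> float:
    """Cost of one call: tokens times the per-token price for each direction."""
    price = PRICES_PER_1M[call.model]
    return (call.input_tokens * price["input"]
            + call.output_tokens * price["output"]) / 1_000_000


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]; values must be non-empty."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(calls: List[LLMCall]) -> Dict[str, float]:
    """Aggregate a non-empty list of calls into summary metrics."""
    latencies = [c.latency_ms for c in calls]
    return {
        "calls": len(calls),
        "total_tokens": sum(c.input_tokens + c.output_tokens for c in calls),
        "total_cost_usd": sum(call_cost(c) for c in calls),
        "mean_latency_ms": mean(latencies),
        "p95_latency_ms": percentile(latencies, 95),
    }


calls = [
    LLMCall("model-a", 1000, 200, 800.0),
    LLMCall("model-a", 500, 100, 1200.0),
    LLMCall("model-a", 2000, 400, 950.0),
]
summary = summarize(calls)
```

Tracking a tail percentile alongside the mean matters because a few slow LLM calls can dominate user-perceived latency while barely moving the average.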

Comprehensive Evaluation Tools

Supports manual and automated scoring of LLM outputs to measure and improve the accuracy and reliability of AI applications.
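As one illustration of combining automated and manual scoring, the sketch below computes a token-overlap F1 score (a common, simple automated metric for short answers) against reference answers and averages any human ratings alongside it. The `EvalRecord` structure, the 0 to 1 rating scale, and the choice of F1 as the automated metric are assumptions for illustration, not Langtrace's evaluation API.

```python
import re
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional


def _tokens(text: str) -> List[str]:
    """Lowercase word tokens; punctuation is dropped."""
    return re.findall(r"\w+", text.lower())


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall between output and reference."""
    pred, ref = _tokens(prediction), _tokens(reference)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


@dataclass
class EvalRecord:
    prompt: str
    output: str
    reference: str
    manual_score: Optional[float] = None  # hypothetical 0-1 rating from a reviewer


def evaluate(records: List[EvalRecord]) -> Dict[str, float]:
    """Average automated F1 over all records and manual scores where present."""
    auto = [token_f1(r.output, r.reference) for r in records]
    manual = [r.manual_score for r in records if r.manual_score is not None]
    return {
        "auto_f1_mean": sum(auto) / len(auto),
        "manual_mean": sum(manual) / len(manual) if manual else float("nan"),
        "manual_coverage": len(manual) / len(records),
    }


records = [
    EvalRecord("Capital of France?", "Paris", "Paris", manual_score=1.0),
    EvalRecord("Largest planet?", "It is Saturn", "Jupiter"),
]
summary = evaluate(records)
```

Reporting manual coverage next to the manual mean makes it clear how much of the dataset a human has actually reviewed.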

Multi-Language SDK Support

Lightweight SDKs available for Python and TypeScript enable easy integration with minimal code changes.

Flexible Deployment Options

Offers both a managed SaaS platform and a self-hosted version, allowing users to choose based on their infrastructure preferences.

Wide Integration Ecosystem

Compatible with over 40 LLM providers, vector databases, and AI frameworks, supporting complex AI workflows like AI agents and retrieval-augmented generation (RAG).

Use Cases

- LLM Application Observability: Developers can monitor and trace large language model API calls and workflows to identify bottlenecks and optimize performance.

- AI Agent and RAG Workflow Debugging: Provides visibility into multi-component AI systems, helping developers understand and improve query processing and response generation.

- Accuracy Improvement for AI Products: Enables systematic evaluation and feedback loops to increase the accuracy of AI-powered applications from prototype to production.

- Cost and Latency Management: Tracks token consumption and inference latency to help manage operational costs and improve user experience.

- Self-Hosted Observability for Privacy: Enterprises can deploy Langtrace on their own infrastructure to maintain data control and comply with security requirements.
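To show how trace data can expose bottlenecks, the following sketch ranks the spans of a hypothetical RAG request by self time: a span's duration minus the time spent in its direct child spans. The `SpanRecord` fields, span names, and durations are invented for illustration; Langtrace's dashboards may compute and present this differently.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class SpanRecord:
    span_id: str
    parent_id: Optional[str]
    name: str
    duration_ms: float


def self_times(spans: List[SpanRecord]) -> Dict[str, float]:
    """Exclusive ("self") time per span: its duration minus its direct children's."""
    child_total: Dict[str, float] = {}
    for s in spans:
        if s.parent_id is not None:
            child_total[s.parent_id] = child_total.get(s.parent_id, 0.0) + s.duration_ms
    # Clamp at zero: concurrent children can overlap and sum past the parent.
    return {s.span_id: max(0.0, s.duration_ms - child_total.get(s.span_id, 0.0))
            for s in spans}


def bottlenecks(spans: List[SpanRecord], top: int = 3) -> List[Tuple[str, float]]:
    """Return (name, self time in ms) for the `top` spans with the most self time."""
    by_id = {s.span_id: s for s in spans}
    ranked = sorted(self_times(spans).items(), key=lambda kv: kv[1], reverse=True)
    return [(by_id[sid].name, ms) for sid, ms in ranked[:top]]


spans = [
    SpanRecord("1", None, "rag_query", 1500.0),
    SpanRecord("2", "1", "embed_query", 100.0),
    SpanRecord("3", "1", "vector_search", 250.0),
    SpanRecord("4", "1", "llm_call", 1050.0),
]
print(bottlenecks(spans, top=2))
```

Ranking by self time rather than total duration keeps the root span, whose duration includes every child, from always appearing as the slowest step.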


Langtrace Alternatives

Laminar

Open-source platform for tracing, evaluating, and analyzing AI applications with seamless LLM observability and tooling.

OpenMeter

Real-time usage metering and flexible billing platform designed for AI, DevTool, and SaaS companies to enable scalable usage-based pricing and revenue maximization.

EdgeBit

Comprehensive software supply chain security platform that continuously monitors running code and automates vulnerability prioritization.

Treblle

API intelligence platform providing real-time monitoring, analytics, security, and documentation to streamline the entire API lifecycle.

Releem

Automated MySQL performance monitoring and tuning tool that simplifies database management with real-time insights and actionable optimization recommendations.

Keywords AI

Full-stack LLM engineering platform enabling developers and PMs to build, monitor, and optimize AI products rapidly with advanced observability and prompt management.

Hoop.dev

Secure access gateway for databases and servers that simplifies infrastructure access with automated security and data masking.

OpenReplay

OpenReplay is an open-source session replay and analytics platform designed for developers and product teams, offering full data control through self-hosting and advanced user behavior insights.

Analytics of Langtrace Website

🇺🇸 US: 62.52%

🇮🇳 IN: 16.57%

🇬🇧 GB: 8.28%

🇧🇷 BR: 7.07%

🇻🇳 VN: 5.52%

Others: 0.04%