fixa

Open-source Python package for automated testing, evaluation, and observability of AI voice agents.

Product Overview

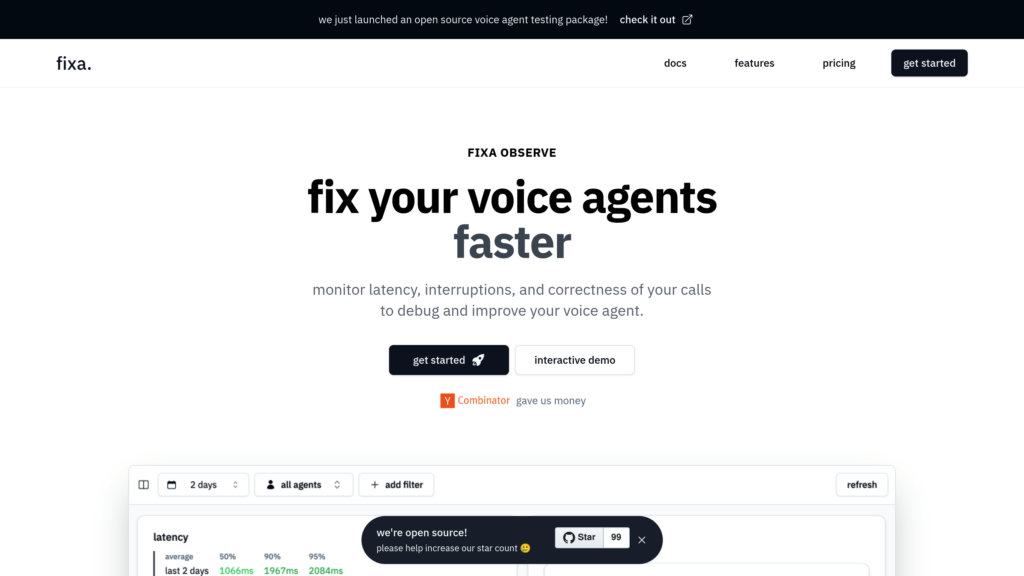

What is fixa?

fixa is an open-source Python package that helps developers test, monitor, and debug AI voice agents. It automates end-to-end testing by placing simulated calls to your voice agent with customizable test agents and scenarios, then evaluates the resulting conversations with large language models (LLMs). It tracks metrics such as latency, interruptions, and correctness, so developers can pinpoint issues like hallucinations or transcription errors. fixa integrates with Twilio for call initiation, Deepgram for transcription, Cartesia for text-to-speech, and OpenAI for evaluation.

Key Features

Automated Voice Agent Testing

Simulate realistic phone calls to your voice agent using customizable test agents and scenarios to validate performance.
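One way to picture the pairing of test agents and scenarios is as a small test matrix. The sketch below is illustrative only: `CallerAgent`, `CallScenario`, `CallTest`, and `build_test_matrix` are hypothetical names chosen for this example and are not taken from fixa's API.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class CallerAgent:
    # Hypothetical: the persona the simulated caller adopts.
    name: str
    persona: str


@dataclass(frozen=True)
class CallScenario:
    # Hypothetical: what the simulated caller tries to accomplish.
    name: str
    goal: str


@dataclass(frozen=True)
class CallTest:
    agent: CallerAgent
    scenario: CallScenario

    @property
    def label(self) -> str:
        return f"{self.agent.name}/{self.scenario.name}"


def build_test_matrix(agents, scenarios):
    """Pair every caller persona with every scenario."""
    return [CallTest(a, s) for a, s in product(agents, scenarios)]


agents = [
    CallerAgent("jessica", "a friendly caller who speaks slowly"),
    CallerAgent("steve", "an impatient caller who interrupts often"),
]
scenarios = [
    CallScenario("order_donut", "order a dozen donuts with sprinkles"),
    CallScenario("cancel_order", "cancel an existing order"),
]

matrix = build_test_matrix(agents, scenarios)
for test in matrix:
    print(test.label)
```

Crossing personas with scenarios keeps each definition short while still covering combinations such as an impatient caller trying to cancel an order.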

LLM-Powered Evaluation

Uses large language models to automatically assess conversation quality and detect failures such as misunderstandings or missing confirmations.
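As a rough sketch of how an LLM-based check for a missing confirmation might be structured, the example below builds a grading prompt from a transcript and parses a JSON verdict. Everything here is an assumption made for illustration: the prompt wording, the verdict format, and the function names are not fixa's. The judge is a plain callable so that a local stub can stand in for a real model call.

```python
import json
from typing import Callable


def build_judge_prompt(transcript: list, criterion: str) -> str:
    """Render the transcript and the criterion into one grading prompt."""
    lines = [f"{turn['role']}: {turn['text']}" for turn in transcript]
    return (
        "You are grading a phone call between a caller and a voice agent.\n"
        f"Criterion: {criterion}\n"
        "Transcript:\n" + "\n".join(lines) + "\n"
        'Reply with JSON only: {"passed": true or false, "reason": "..."}'
    )


def evaluate(transcript: list, criterion: str, judge: Callable[[str], str]) -> dict:
    """Ask the judge for a verdict; treat unparseable output as a failure."""
    raw = judge(build_judge_prompt(transcript, criterion))
    try:
        verdict = json.loads(raw)
    except json.JSONDecodeError:
        return {"passed": False, "reason": "judge returned non-JSON output"}
    if not isinstance(verdict, dict):
        return {"passed": False, "reason": "judge returned an unexpected shape"}
    return {
        "passed": bool(verdict.get("passed", False)),
        "reason": str(verdict.get("reason", "")),
    }


def stub_judge(prompt: str) -> str:
    # Stand-in for a real LLM call: passes only if an agent turn says "confirm".
    agent_lines = [l for l in prompt.splitlines() if l.startswith("agent:")]
    passed = any("confirm" in l.lower() for l in agent_lines)
    reason = "agent confirmed the order" if passed else "agent never confirmed the order"
    return json.dumps({"passed": passed, "reason": reason})


transcript = [
    {"role": "caller", "text": "I'd like a dozen donuts."},
    {"role": "agent", "text": "Sure, your order is placed."},
]
result = evaluate(
    transcript,
    "The agent repeats the order details back to the caller.",
    stub_judge,
)
print(result)
```

Treating malformed judge output as a failed check is a deliberate choice in this sketch: a silent pass would hide exactly the kind of failure the evaluation is meant to surface.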

Comprehensive Observability

Monitors latency metrics (p50, p90, p95), interruptions, and transcription accuracy to provide detailed insights into voice agent behavior.
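For readers unfamiliar with the p50, p90, and p95 notation, the sketch below computes those percentiles from a list of per-turn response latencies using the nearest-rank method. The sample values are made up, and the choice of nearest-rank is an assumption for this example. How fixa itself computes percentiles is not stated here.

```python
def percentile(values, pct):
    """Nearest-rank percentile for an integer pct in 1..100."""
    if not values:
        raise ValueError("no latency samples")
    ordered = sorted(values)
    # Integer ceiling of pct/100 * n, which avoids floating-point rounding.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[max(rank, 1) - 1]


# Made-up response latencies in milliseconds, one per agent turn.
latencies_ms = [420, 380, 910, 450, 610, 390, 1500, 470, 520, 430]

summary = {f"p{p}": percentile(latencies_ms, p) for p in (50, 90, 95)}
print(summary)  # {'p50': 450, 'p90': 910, 'p95': 1500}
```

The gap between p50 and p95 in this sample shows why tail percentiles matter: the typical turn responds in under half a second, but the slowest turns take more than a second, which callers notice as dead air.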

Open Source and Extensible

Fully open-source Python package allowing users to integrate preferred APIs and customize testing and evaluation workflows.

Cloud Visualization Platform

Optional cloud service to visualize test results with audio playback, transcripts, failure pinpoints, and alerting via Slack.

Flexible Integration Stack

Built on top of Twilio, Deepgram, Cartesia, and OpenAI, with plans for more integrations to support diverse voice AI ecosystems.

Use Cases

- Voice Agent Quality Assurance: Run automated tests to ensure your AI voice assistant performs reliably in various conversational scenarios.

- Production Monitoring: Analyze live calls to detect and diagnose issues like latency spikes, interruptions, and incorrect responses in real time.

- Prompt and Conversation Debugging: Identify root causes of failures such as hallucinations or missing confirmations and receive actionable suggestions to improve prompts.

- Development and Iteration: Accelerate voice agent development cycles by integrating testing and evaluation into CI/CD pipelines.

- Team Collaboration and Alerts: Use Slack alerts and cloud dashboards to keep teams informed of voice agent health and quickly respond to issues.
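The CI/CD use case above can be sketched as a simple gate that turns a batch of evaluation results into a process exit code. This is a minimal illustration under stated assumptions: the result format (`name`, `passed`) and the 90% threshold are hypothetical and not defined by fixa.

```python
def pass_rate(results):
    """Fraction of test calls whose evaluation passed."""
    if not results:
        return 0.0
    return sum(1 for r in results if r["passed"]) / len(results)


def ci_exit_code(results, min_pass_rate=0.9):
    """Return 0 when the pass rate meets the threshold, otherwise 1.

    An empty result set counts as a failure, so a run that placed no
    calls cannot pass silently.
    """
    if not results:
        return 1
    return 0 if pass_rate(results) >= min_pass_rate else 1


# Hypothetical results from one test run.
results = [
    {"name": "jessica/order_donut", "passed": True},
    {"name": "jessica/cancel_order", "passed": True},
    {"name": "steve/order_donut", "passed": False},
    {"name": "steve/cancel_order", "passed": True},
]

exit_code = ci_exit_code(results)
failed = [r["name"] for r in results if not r["passed"]]
print(f"pass rate: {pass_rate(results):.0%}, failed: {failed}, exit code: {exit_code}")
```

In a pipeline, a script would typically pass the returned value to `sys.exit` so that a drop in pass rate fails the build.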

fixa Alternatives

Evidently AI

Open-source and cloud platform for evaluating, testing, and monitoring AI and ML models with extensive metrics and collaboration tools.

Ragas

Open-source framework for comprehensive evaluation and testing of Retrieval Augmented Generation (RAG) and Large Language Model (LLM) applications.

Confident AI

Comprehensive cloud platform for evaluating, benchmarking, and safeguarding LLM applications with customizable metrics and collaborative workflows.

LangWatch

End-to-end LLMops platform for monitoring, evaluating, and optimizing large language model applications with real-time insights and automated quality controls.

Cyara

Comprehensive CX assurance platform that automates testing and monitoring of customer journeys across voice, digital, and AI channels.

Ethiack

Comprehensive cybersecurity platform combining automated and human ethical hacking to continuously identify and manage vulnerabilities across digital assets.

Datafold

A unified data reliability platform that accelerates data migrations, automates testing, and monitors data quality across the entire data stack.

Elementary Data

A data observability platform designed for data and analytics engineers to monitor, detect, and resolve data quality issues efficiently within dbt pipelines and beyond.
