LangWatch

End-to-end LLMOps platform for monitoring, evaluating, and optimizing large language model applications with real-time insights and automated quality controls.

Product Overview

What is LangWatch?

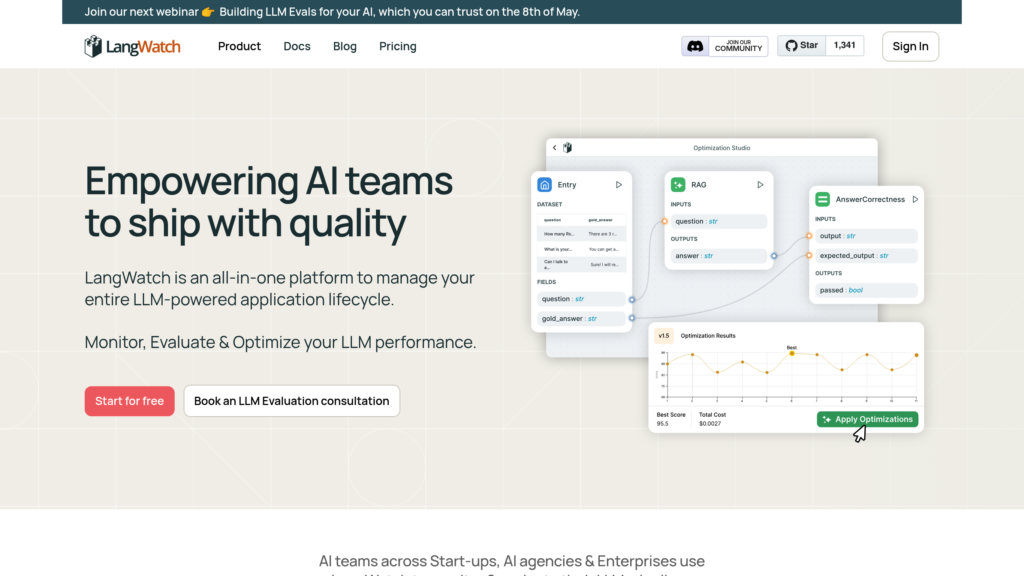

LangWatch is a comprehensive LLM operations platform designed to help AI teams manage the entire lifecycle of large language model (LLM) applications. It integrates seamlessly with any tech stack to provide monitoring, evaluation, and optimization tools that ensure AI quality, safety, and performance. By automating quality checks, enabling human-in-the-loop evaluations, and offering detailed analytics, LangWatch helps businesses reduce AI risks such as hallucinations and data leaks while accelerating deployment from proof-of-concept to production. The platform supports continuous improvement through visual experiment tracking, customizable evaluations, and alerting systems, making it ideal for teams aiming to build reliable and compliant AI products.

Key Features

Comprehensive LLM Monitoring

Automatically logs inputs, outputs, latency, costs, and internal AI decision steps to provide full observability and facilitate debugging and auditing.
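To make the kind of record described above concrete, here is a minimal sketch of logging inputs, outputs, latency, and a rough cost around a model call. It does not use the LangWatch SDK: `TraceRecord`, `TraceLog`, `traced`, `price_per_char`, and `fake_llm` are hypothetical names chosen for illustration, and the per-character cost model is a placeholder assumption.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TraceRecord:
    """One logged call (hypothetical schema, not LangWatch's)."""
    input: str
    output: str
    latency_s: float
    cost_usd: float


@dataclass
class TraceLog:
    records: List[TraceRecord] = field(default_factory=list)

    def traced(self, price_per_char: float = 0.0) -> Callable:
        """Decorator that records input, output, latency, and a rough cost."""
        def wrap(fn: Callable[[str], str]) -> Callable[[str], str]:
            def inner(prompt: str) -> str:
                start = time.perf_counter()
                output = fn(prompt)
                latency = time.perf_counter() - start
                # Placeholder cost model: characters stand in for tokens.
                cost = (len(prompt) + len(output)) * price_per_char
                self.records.append(TraceRecord(prompt, output, latency, cost))
                return output
            return inner
        return wrap


log = TraceLog()


@log.traced(price_per_char=0.00001)
def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call.
    return prompt.upper()


fake_llm("hello")
```

After the call, `log.records` holds one entry that could be inspected or exported for debugging and auditing.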

Automated Quality Evaluations

Runs real-time, customizable quality checks and safety assessments with over 30 built-in evaluators and supports human expert reviews.

Optimization Studio

Provides a visual drag-and-drop interface for creating, testing, and refining LLM pipelines, with automatic prompt generation and experiment version control.

Alerts and Dataset Automation

Sends real-time alerts on performance regressions and automatically generates datasets from annotated feedback for continuous model improvement.
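As an illustration of what a regression alert might check, the sketch below compares the mean of recent evaluation scores against a baseline window. This is an assumed rule, not LangWatch's actual alerting logic; `regression_alert` and `tolerance` are hypothetical names.

```python
from statistics import mean
from typing import Sequence


def regression_alert(
    baseline: Sequence[float],
    recent: Sequence[float],
    tolerance: float = 0.05,
) -> bool:
    """Return True when the recent mean score drops more than
    `tolerance` below the baseline mean."""
    if not baseline or not recent:
        raise ValueError("both windows need at least one score")
    return mean(baseline) - mean(recent) > tolerance
```

For example, `regression_alert([0.9, 0.92, 0.88], [0.7, 0.75])` flags a regression, while a dip smaller than the tolerance does not.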

Custom Analytics and Business Metrics

Enables building tailored dashboards and graphs to track AI performance indicators like response quality, cost, and user interactions.

Enterprise-Ready and Flexible Deployment

Open-source, model-agnostic platform with ISO compliance, role-based access control, and options for self-hosting or cloud deployment.

Use Cases

- AI Quality Assurance: Ensure consistent, safe, and accurate AI outputs by automating quality checks and involving domain experts in evaluation workflows.

- Risk Mitigation: Detect and prevent AI hallucinations, data leaks, and off-topic responses to safeguard sensitive information and brand reputation.

- Performance Monitoring: Track cost, latency, and error rates over time with customizable analytics to optimize AI system efficiency and user experience.

- Model Optimization: Use the Optimization Studio to iterate on prompt engineering and pipeline configurations, accelerating deployment from prototype to production.

- Human-in-the-Loop Evaluation: Bring in domain experts to provide manual feedback and annotations, improving AI reliability and closing the feedback loop.

LangWatch Alternatives

Cyara

Comprehensive CX assurance platform that automates testing and monitoring of customer journeys across voice, digital, and AI channels.

Ethiack

Comprehensive cybersecurity platform combining automated and human ethical hacking to continuously identify and manage vulnerabilities across digital assets.

Datafold

A unified data reliability platform that accelerates data migrations, automates testing, and monitors data quality across the entire data stack.

Elementary Data

A data observability platform designed for data and analytics engineers to monitor, detect, and resolve data quality issues efficiently within dbt pipelines and beyond.

Raga AI

Comprehensive AI testing platform that detects, diagnoses, and fixes issues across multiple AI modalities to accelerate development and reduce risks.

Aporia

Comprehensive platform delivering customizable guardrails and observability to ensure secure, reliable, and compliant AI applications.

OpenLIT

Open-source AI engineering platform providing end-to-end observability, prompt management, and security for Generative AI and LLM applications.

Openlayer

Enterprise platform for comprehensive AI system evaluation, monitoring, and governance from development to production.

Analytics of LangWatch Website

🇺🇸 US: 30.3%

🇮🇳 IN: 8.07%

🇧🇷 BR: 6.17%

🇳🇱 NL: 4.31%

🇻🇳 VN: 4.27%

Others: 46.88%