Tensorfuse

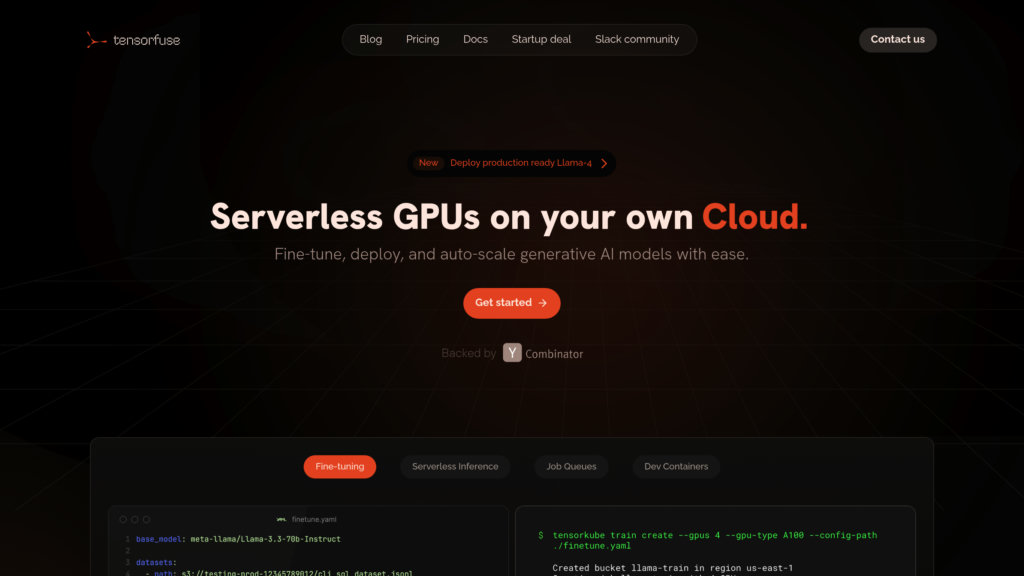

Serverless GPU runtime enabling seamless deployment, fine-tuning, and autoscaling of AI models on private cloud infrastructure.

Product Overview

What is Tensorfuse?

Tensorfuse is a platform that simplifies running generative AI models by managing Kubernetes clusters on your own cloud infrastructure. It provides serverless GPUs that scale to zero when idle and scale up quickly to meet demand. Tensorfuse supports diverse hardware, including GPUs (A10G, A100, H100), TPUs, Trainium/Inferentia chips, and FPGAs, which allows flexible and efficient model deployment. The platform also offers OpenAI-compatible APIs, serverless training jobs, and built-in finetuning methods such as LoRA and QLoRA. All of this abstracts away complex infrastructure management, which speeds up AI development and reduces cloud GPU costs.

Key Features

Serverless GPU Management

Automatically scales GPU resources up from zero to handle concurrent workloads, and back down to zero when idle, without manual intervention.
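
Tensorfuse's actual scaling algorithm is not described on this page, so the following is only a minimal sketch of a generic concurrency-based scale-to-zero rule. The `ScalingPolicy` fields and the `desired_replicas` function are hypothetical illustrations, not Tensorfuse configuration. The replica count is the number of in-flight requests divided by an assumed per-replica concurrency target, rounded up and clamped, with a floor of zero so an idle deployment releases its GPUs.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    # Hypothetical knobs for illustration; not Tensorfuse's real config keys.
    target_concurrency: int = 4  # in-flight requests one GPU replica should serve
    min_replicas: int = 0        # 0 permits scale-to-zero when idle
    max_replicas: int = 8        # cap on GPU replicas (cost/quota limit)


def desired_replicas(in_flight: int, policy: ScalingPolicy) -> int:
    """Return how many replicas are needed for the current in-flight load."""
    if in_flight <= 0:
        # No traffic: fall back to the floor, which is zero by default.
        return policy.min_replicas
    needed = math.ceil(in_flight / policy.target_concurrency)
    # Clamp between the configured floor and ceiling.
    return max(policy.min_replicas, min(policy.max_replicas, needed))


policy = ScalingPolicy()
for load in (0, 1, 9, 100):
    print(load, "->", desired_replicas(load, policy))
# 0 -> 0, 1 -> 1, 9 -> 3, 100 -> 8
```

Production autoscalers usually also average load over a time window and wait for a grace period before scaling to zero, to avoid repeatedly tearing down and cold-starting GPUs; this sketch omits that smoothing.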

Multi-Hardware Support

Runs AI workloads on various hardware including NVIDIA GPUs, TPUs, Trainium/Inferentia chips, and FPGAs.

OpenAI-Compatible API

Exposes your AI models through OpenAI-compatible APIs, so existing OpenAI client code can integrate with them easily.
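
As a minimal sketch of what "OpenAI-compatible" means for a client, the code below builds (but does not send) a standard chat-completions request using only the Python standard library. It assumes the deployment serves the usual `/v1/chat/completions` route with Bearer-token auth; the base URL, API key, and model name are hypothetical placeholders, and `build_chat_request` is an illustrative helper, not part of any Tensorfuse SDK.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (without sending) a POST to an OpenAI-style /v1/chat/completions route."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Hypothetical endpoint, key, and model name; substitute your deployment's values.
req = build_chat_request(
    "https://llm.example.com/", "sk-placeholder", "my-llama-3-deployment", "Hello!"
)
print(req.get_method(), req.full_url)
# Sending it: json.load(urllib.request.urlopen(req))["choices"][0]["message"]["content"]
```

Because the request shape matches OpenAI's, the official `openai` Python SDK can usually be pointed at such an endpoint instead by passing its `base_url` and `api_key` to the client constructor.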

Built-in Model Finetuning

Provides out-of-the-box tooling for finetuning with techniques such as LoRA and QLoRA, as well as for reinforcement learning.
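
Tensorfuse's own finetuning code is not shown here. As background, the sketch below illustrates the standard LoRA formulation in plain Python: a frozen weight matrix `W` plus a trainable low-rank update `B A`, scaled by `alpha / r`. The helper names and toy matrices are hypothetical. QLoRA trains the same kind of adapters but stores the frozen base weights in 4-bit quantized form, which this sketch does not model.

```python
def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha):
    """LoRA forward pass: y = W x + (alpha / r) * B (A x).

    W (d_out x d_in) stays frozen; only A (r x d_in) and B (d_out x r) are trained.
    """
    r = len(A)  # adapter rank = number of rows in A
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [y + (alpha / r) * u for y, u in zip(base, update)]


def trainable_params(d_in, d_out, r):
    """Parameters updated by full finetuning vs. by a rank-r LoRA adapter."""
    return d_in * d_out, r * (d_in + d_out)


# B starts at zero, so the adapted layer initially matches the frozen layer.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]        # r = 1
B_init = [[0.0], [0.0]]
print(lora_forward(W, A, B_init, [2.0, 3.0], alpha=1.0))  # [2.0, 3.0]

full, lora = trainable_params(4096, 4096, r=8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

The parameter count shows why LoRA is cheap to train: for a 4096 x 4096 projection at rank 8, the adapter updates about 0.39% as many weights as full finetuning of that layer.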

Custom Docker and Networking

Optimized Docker implementation for faster cold starts and a custom Istio-based networking layer for multi-node GPU inference and training.

Developer Productivity Tools

GPU devcontainers with hot reloading enable rapid experimentation directly on GPUs without complex setup.

Use Cases

- AI Model Deployment : Deploy custom AI models quickly on your private cloud with autoscaling serverless GPUs.

- Generative AI Applications : Run inference and batch jobs efficiently for generative AI models such as Llama 3, Qwen, and Stable Diffusion.

- Model Finetuning and Training : Perform serverless training and fine-tuning of large models using advanced techniques without managing environments.

- Cost-Effective Cloud GPU Usage : Reduce cloud GPU expenses by up to 30% through intelligent autoscaling and efficient resource management.

- DevOps Automation : Automate deployment workflows with GitHub Actions integration and simplify infrastructure management.

Tensorfuse Alternatives

Modelbit

Infrastructure-as-code platform for seamless deployment, scaling, and management of machine learning models in production.

Pipekit

A scalable control plane for managing and optimizing Argo Workflows on Kubernetes, enabling efficient data and CI pipeline operations.

Brainboard

A collaborative platform for visually designing, generating, and managing cloud infrastructure with automated Terraform code generation.

Movestax

An all-in-one serverless-first cloud platform designed to simplify app deployment, serverless databases, workflow automation, and infrastructure management for modern developers.

UbiOps

A flexible platform for deploying, managing, and orchestrating AI and ML models across cloud, on-premise, and hybrid environments.

Release

Platform for creating and managing on-demand, ephemeral environments that accelerate development workflows and optimize DevOps costs.

CTO.ai

A developer-centric platform offering workflow automation, CI/CD pipelines, and cloud infrastructure orchestration to streamline software delivery.

Codesphere

Developer-centric cloud platform enabling seamless deployment, autoscaling, and management of complex applications with minimal configuration.

Analytics of Tensorfuse Website

🇺🇸 US: 45.34%

🇮🇳 IN: 43.61%

🇻🇳 VN: 11.03%

Others: 0.01%