Openlayer

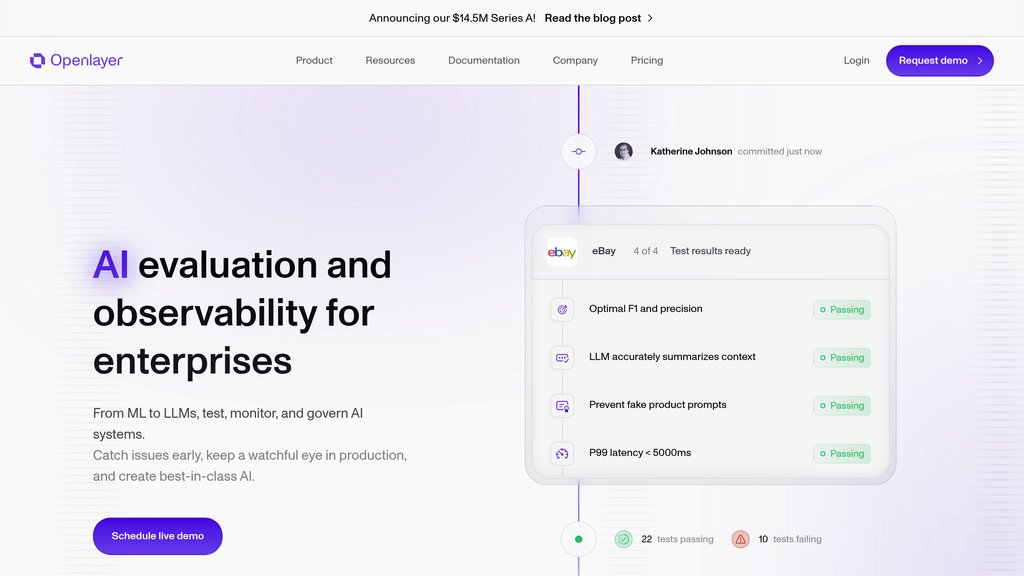

Enterprise platform for comprehensive AI system evaluation, monitoring, and governance from development to production.

Product Overview

What is Openlayer?

Openlayer is an enterprise platform for testing, monitoring, and governing AI systems across their lifecycle, from machine learning models to large language models. It provides real-time observability and automated evaluation so teams can detect issues early and keep AI systems reliable in production. Openlayer fits into existing workflows and supports collaboration across engineering, data science, and product teams.

Key Features

Comprehensive AI Evaluation

Offers over 100 customizable tests and version comparisons to validate AI models and catch regressions before deployment.

Real-Time Monitoring and Observability

Enables continuous tracking of AI system performance in production with detailed tracing and request-level insights.

Seamless Workflow Integration

Provides SDKs, CLI tools, and CI/CD pipeline integration for automated testing and validation within existing development processes.

Collaborative Platform

Facilitates alignment among engineers, data scientists, and product managers by making model evaluation transparent and accessible.

Explainability and Debugging Tools

Provides explainability features to understand model predictions and troubleshoot issues with clarity and context.

Scalable and Flexible

Handles AI systems of all sizes, from prototypes to large-scale production deployments, ensuring smooth transitions and reliability.

Use Cases

- AI Model Validation: Data science teams use Openlayer to rigorously test models against diverse criteria to ensure robustness before release.

- Production AI Monitoring: Operations teams monitor live AI systems to detect anomalies and performance drops in real time, enabling quick fixes.

- Continuous Integration for AI: Development teams integrate Openlayer into CI/CD pipelines to automate testing and maintain consistent AI quality across versions.

- Cross-Functional Collaboration: Product managers and engineers collaborate on defining evaluation metrics and interpreting results to align on AI system goals.

- Explainability and Compliance: Organizations leverage explainability tools to meet regulatory requirements and increase trust in AI decisions.
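
To make the continuous integration use case more concrete, here is a minimal, generic sketch of a regression gate that a CI job could run between model versions. It does not use the Openlayer SDK or CLI. The `MetricCheck` class, the `find_regressions` and `gate` functions, the metric names, and all values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class MetricCheck:
    """One metric compared across two model versions (hypothetical structure)."""
    name: str
    baseline: float   # value measured on the previously released version
    candidate: float  # value measured on the version under test
    max_drop: float   # largest tolerated decrease relative to the baseline


def find_regressions(checks):
    """Return the names of metrics whose candidate value fell more than max_drop below baseline."""
    return [c.name for c in checks if c.baseline - c.candidate > c.max_drop]


def gate(checks):
    """Return a process-style exit code: 0 if no metric regressed, 1 otherwise."""
    failed = find_regressions(checks)
    for name in failed:
        print(f"regression: {name}")
    return 1 if failed else 0


# Illustrative values only; a real pipeline would load these from evaluation runs.
checks = [
    MetricCheck("accuracy", baseline=0.91, candidate=0.92, max_drop=0.01),
    MetricCheck("f1", baseline=0.88, candidate=0.84, max_drop=0.02),
]
exit_code = gate(checks)
```

A CI step could pass `exit_code` to `sys.exit` so that a regression fails the build. How Openlayer itself defines and runs such checks is not covered here.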

Openlayer Alternatives

Relyable

Comprehensive testing and monitoring platform for AI voice agents, enabling rapid deployment and production reliability through automated evaluation and real-time performance tracking.

OpenLIT

Open-source AI engineering platform providing end-to-end observability, prompt management, and security for Generative AI and LLM applications.

Atla AI

Advanced AI evaluation platform delivering customizable, high-accuracy assessments of generative AI outputs to ensure safety and reliability.

Aporia

Comprehensive platform delivering customizable guardrails and observability to ensure secure, reliable, and compliant AI applications.

Raga AI

Comprehensive AI testing platform that detects, diagnoses, and fixes issues across multiple AI modalities to accelerate development and reduce risks.

HoneyHive

Comprehensive platform for testing, monitoring, and optimizing AI agents with end-to-end observability and evaluation capabilities.

Decipher AI

AI-powered session replay analysis platform that automatically detects bugs, UX issues, and user behavior insights with rich technical context.

Elementary Data

A data observability platform designed for data and analytics engineers to monitor, detect, and resolve data quality issues efficiently within dbt pipelines and beyond.

Analytics of Openlayer Website

🇮🇳 IN: 30.85%

🇺🇸 US: 25.67%

🇳🇱 NL: 12.08%

🇮🇩 ID: 11.55%

🇻🇳 VN: 8.62%

Others: 11.22%