LM Arena (Chatbot Arena)

Open-source, community-driven platform for live benchmarking and evaluation of large language models (LLMs) using crowdsourced pairwise comparisons and Elo ratings.

Product Overview

What is LM Arena (Chatbot Arena)?

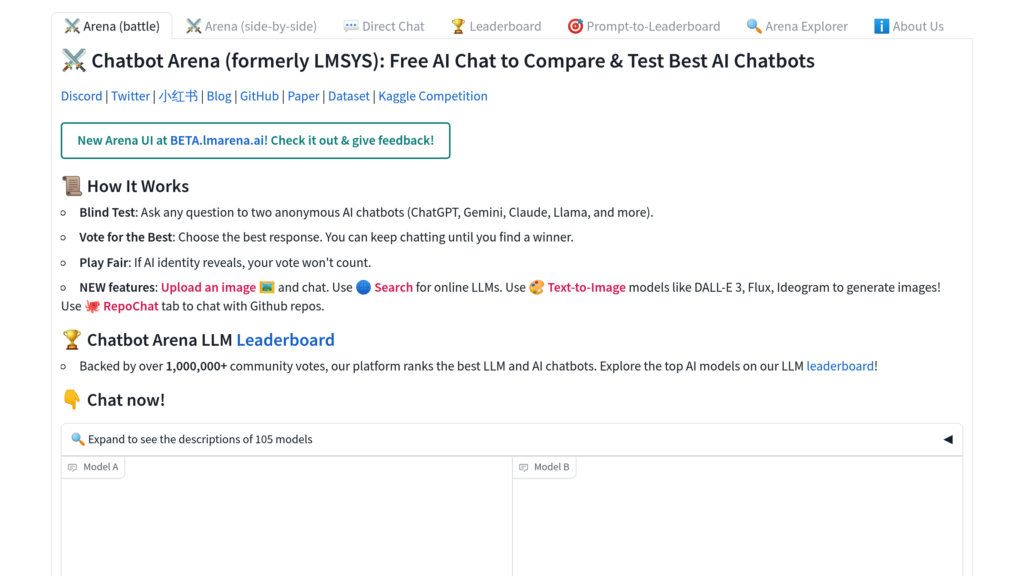

LM Arena, also known as Chatbot Arena, is an open-source platform developed by LMSYS and UC Berkeley SkyLab for evaluating large language models through live, transparent, community-driven comparisons. Users chat with two anonymous LLMs side by side and vote for the better response, and the collected votes are used to rank models with the Elo rating system. The platform covers a wide range of publicly released models, including both open-weight models and commercial APIs, and updates its leaderboard continuously based on real-world user feedback. LM Arena emphasizes transparency, open science, and collaboration by sharing its datasets, evaluation tools, and infrastructure openly on GitHub.

Key Features

Crowdsourced Pairwise Model Comparison

Users engage in anonymous, randomized battles between two LLMs, voting on the better response to generate reliable comparative data.
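
As a rough illustration of the battle flow described above, the following Python sketch samples two distinct models at random and records a vote against anonymous slot names. The `Battle` class, `sample_battle`, `record_vote`, and the slot labels `model_a` / `model_b` / `tie` are hypothetical names chosen for this example, not LM Arena's actual code or data schema.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

VALID_VOTES = {"model_a", "model_b", "tie"}  # assumed vote labels, for illustration only


@dataclass
class Battle:
    model_a: str                  # real model name, hidden from the voter
    model_b: str                  # real model name, hidden from the voter
    winner: Optional[str] = None  # one of VALID_VOTES once the user has voted


def sample_battle(models: List[str], rng: random.Random) -> Battle:
    """Pick two distinct models uniformly at random and assign them to slots A and B."""
    a, b = rng.sample(models, 2)
    return Battle(model_a=a, model_b=b)


def record_vote(battle: Battle, vote: str) -> Battle:
    """Store the vote, which refers to an anonymous slot rather than a model name."""
    if vote not in VALID_VOTES:
        raise ValueError(f"unknown vote: {vote!r}")
    battle.winner = vote
    return battle


if __name__ == "__main__":
    rng = random.Random(0)
    battle = sample_battle(["model-x", "model-y", "model-z"], rng)
    record_vote(battle, "model_a")
    print(battle)
```

Because the vote is recorded against a slot, the model identities only need to be revealed after the user has chosen, which is what keeps the comparison anonymous in this sketch.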

Elo Rating System for Model Ranking

Uses the widely recognized Elo rating system, which adjusts each model's rating after every vote, to rank LLM performance from pairwise outcomes.
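
To make the ranking idea concrete, here is a minimal sketch of the standard Elo update applied to a single vote. The K-factor of 32, the starting rating of 1000, and the function names are assumptions chosen for illustration; the platform's actual parameters and implementation may differ.

```python
from typing import Dict


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the standard Elo logistic curve (base 10, scale 400)."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def update_elo(
    ratings: Dict[str, float],
    model_a: str,
    model_b: str,
    outcome: float,          # 1.0 if A wins, 0.0 if B wins, 0.5 for a tie
    k: float = 32.0,         # assumed K-factor
    initial: float = 1000.0  # assumed starting rating for unseen models
) -> None:
    """Update both ratings in place after one pairwise vote."""
    r_a = ratings.get(model_a, initial)
    r_b = ratings.get(model_b, initial)
    e_a = expected_score(r_a, r_b)
    # A gains what B loses, so the sum of the two ratings is unchanged.
    ratings[model_a] = r_a + k * (outcome - e_a)
    ratings[model_b] = r_b + k * ((1.0 - outcome) - (1.0 - e_a))


if __name__ == "__main__":
    ratings: Dict[str, float] = {}
    update_elo(ratings, "model-x", "model-y", outcome=1.0)
    print(ratings)
```

A win over an equally rated opponent moves each rating by half the K-factor, while a win over a much weaker opponent moves them very little, which is how upsets come to carry more weight than expected results.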

Open-Source Infrastructure

All platform components, including the frontend, backend, evaluation pipelines, and ranking algorithms, are open source and publicly available.

Live and Continuous Evaluation

Real-time collection of user prompts and votes ensures up-to-date benchmarking reflecting current model capabilities and real-world use cases.

Support for Publicly Released Models

Includes models that are open-weight, publicly accessible via APIs, or available as services, ensuring transparency and reproducibility.

Community Engagement and Transparency

Encourages broad participation and openly shares user preference data and prompts to foster collaborative AI research.

Use Cases

- LLM Performance Benchmarking: Researchers and developers can evaluate and compare the effectiveness of various large language models under real-world conditions.

- Model Selection for Deployment: Organizations can identify the best-performing LLMs for their specific applications by reviewing live community-driven rankings.

- Open Science and Research: Academics and AI practitioners can access shared datasets and tools to conduct reproducible research and improve model development.

- Community Feedback for Model Improvement: Model providers can gather anonymized user feedback and voting data to refine and enhance their AI systems before official releases.

LM Arena (Chatbot Arena) Alternatives

RunPod

A cloud computing platform optimized for AI workloads, offering scalable GPU resources for training, fine-tuning, and deploying AI models.

Geekbench

A cross-platform benchmarking tool measuring CPU and GPU performance across various devices and operating systems.

MiroMind

A research assistant that leverages open-source models for deep data analysis, web search, and code generation.

Sakana AI

Tokyo-based AI research company pioneering nature-inspired foundation models and fully automated AI-driven scientific discovery.

Ballpark

A user research platform that simplifies capturing high-quality feedback on product ideas, marketing copy, designs, and prototypes with versatile testing methods and rich media insights.

Userbrain

Unmoderated remote user testing platform streamlining UX research through a global tester pool and automated analysis tools.

MindSpore

An all-scenario, open-source deep learning framework designed for easy development, efficient execution, and unified deployment across cloud, edge, and device environments.

无问芯穹 (Infinigence AI)

Enterprise-grade heterogeneous computing platform enabling efficient deployment of large models across diverse chip architectures.

Analytics of LM Arena (Chatbot Arena) Website

🇷🇺 RU: 12.95%

🇮🇳 IN: 11.97%

🇺🇸 US: 8.32%

🇨🇳 CN: 5.79%

🇧🇷 BR: 3.16%

Others: 57.81%