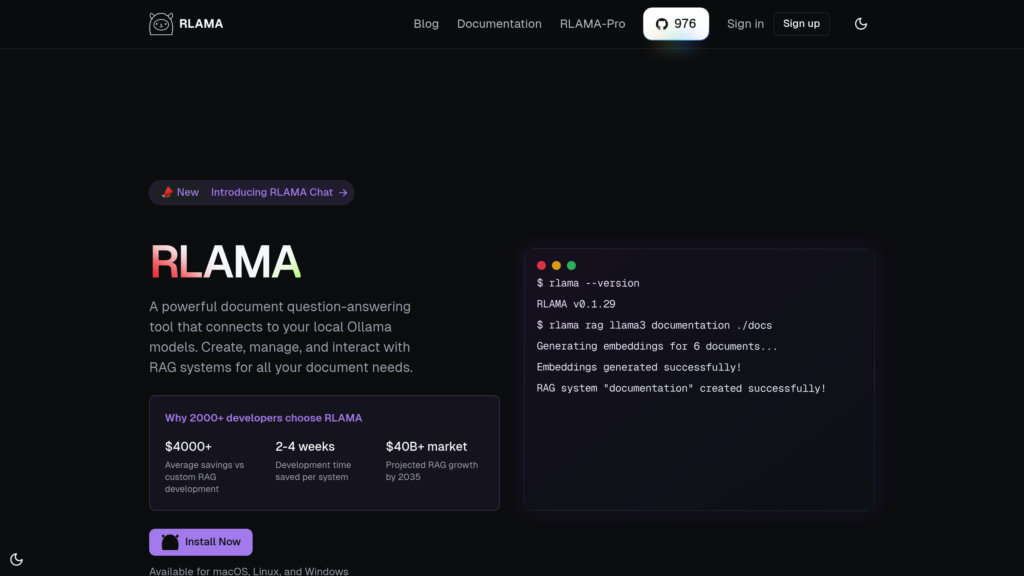

RLAMA

Open-source, local-first document question-answering tool that integrates with Ollama and Hugging Face models to build retrieval-augmented generation (RAG) systems.


Product Overview

What is RLAMA?

RLAMA is an AI-driven platform for creating, managing, and interacting with Retrieval-Augmented Generation (RAG) systems for document-based question answering. It runs fully locally: documents are processed and embeddings are generated on the user's machine, which keeps data private. RLAMA supports a wide range of document formats and several chunking strategies, including semantic chunking, to improve context retrieval. It integrates with local Ollama models and with Hugging Face's model hub, so users can choose from many models. RLAMA also provides web crawling to build a RAG system directly from a website, directory watching to update a RAG system automatically, and an HTTP API for integration into other applications. It runs on macOS, Linux, and Windows, making it accessible to developers and enterprises alike.

Key Features

Local-First Processing

All document parsing, embedding generation, and querying happen locally with no data sent externally, ensuring full privacy and security.
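
The retrieval step of a RAG system is plain vector arithmetic, which is one reason it can run entirely on the user's machine. The following Python sketch ranks stored chunks against a query by cosine similarity. It is a generic illustration, not RLAMA's code: the vectors are toy values standing in for embeddings that a local embedding model would produce, and the names `cosine` and `top_k` are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], chunks: dict[str, list[float]], k: int = 3) -> list[str]:
    """Return the ids of the k chunks whose (hypothetical) embeddings best match the query."""
    ranked = sorted(chunks, key=lambda cid: cosine(query, chunks[cid]), reverse=True)
    return ranked[:k]


# Toy 2-D "embeddings"; a real system would use vectors from an embedding model.
index = {"intro": [1.0, 0.0], "install": [0.0, 1.0], "usage": [1.0, 1.0]}
print(top_k([1.0, 0.1], index, k=2))
```

The retrieved chunk texts would then be passed to the language model as context for its answer.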

Multi-Format Document Support

Supports a wide variety of document types, including plain text, Markdown, PDF, Word, Excel, code files, and more, for building versatile knowledge bases.

Advanced Semantic Chunking

Offers several chunking strategies (fixed, semantic, hierarchical, and hybrid) for splitting documents into segments suited to retrieval.
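
To illustrate the simplest of these strategies, fixed chunking, here is a minimal Python sketch that cuts text into fixed-size character windows that overlap. The function name and the `size`/`overlap` defaults are hypothetical and not taken from RLAMA. Semantic, hierarchical, and hybrid chunking typically split along meaning or document structure instead, and are not shown.

```python
def fixed_chunks(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of `size` characters; consecutive windows share `overlap` characters."""
    if size <= 0:
        raise ValueError("size must be positive")
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    step = size - overlap  # how far each window advances
    chunks: list[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):  # last window reached the end of the text
            break
    return chunks


print(fixed_chunks("abcdefghij", size=4, overlap=2))  # ['abcd', 'cdef', 'efgh', 'ghij']
```

With overlap, text near a boundary appears in both neighbouring chunks, so a passage split by a cut is less likely to be lost to retrieval, at the cost of some duplicated text in the index.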

Integration with Ollama and Hugging Face

Seamlessly connects with local Ollama models and supports over 45,000 GGUF models from Hugging Face for flexible AI model selection.

Web Crawling and Directory Watching

Automatically creates and updates RAG systems from websites and local directories, keeping knowledge bases up to date.
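
The source does not say how RLAMA detects changes in a watched directory. One common approach, shown here as a hypothetical Python sketch, is to poll the directory and compare file modification times between snapshots. The names `snapshot`, `diff`, `watch`, and the `on_change` callback (where re-chunking and re-embedding would happen) are illustrative, not RLAMA's API.

```python
import os
import time
from typing import Callable, Optional


def snapshot(root: str) -> dict[str, float]:
    """Map every file under `root` to its last-modification time."""
    mtimes: dict[str, float] = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mtimes[path] = os.path.getmtime(path)
            except FileNotFoundError:
                continue  # file vanished between listing and stat; skip it
    return mtimes


def diff(old: dict[str, float], new: dict[str, float]) -> tuple[list[str], list[str], list[str]]:
    """Return (added, removed, modified) paths between two snapshots."""
    added = sorted(p for p in new if p not in old)
    removed = sorted(p for p in old if p not in new)
    modified = sorted(p for p in new if p in old and new[p] != old[p])
    return added, removed, modified


def watch(
    root: str,
    on_change: Callable[[list[str], list[str], list[str]], None],
    interval: float = 2.0,
    max_polls: Optional[int] = None,
) -> None:
    """Poll `root` every `interval` seconds; call the hypothetical `on_change` hook when files change."""
    previous = snapshot(root)
    polls = 0
    while max_polls is None or polls < max_polls:
        time.sleep(interval)
        current = snapshot(root)
        added, removed, modified = diff(previous, current)
        if added or removed or modified:
            on_change(added, removed, modified)  # e.g. re-chunk and re-embed these files
        previous = current
        polls += 1
```

Polling is simple and works the same on macOS, Linux, and Windows; event-based mechanisms such as inotify on Linux avoid rescanning the tree but differ per platform.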

API Server and CLI Tools

Provides a RESTful HTTP API and a comprehensive command-line interface for easy integration and workflow automation.

Use Cases

- Technical Documentation Querying: Developers and engineers can quickly search and query project docs, manuals, and specifications locally.

- Private Knowledge Bases: Organizations can build secure, private RAG systems for sensitive documents without exposing data externally.

- Research Assistance: Researchers and students can index and query academic papers, textbooks, and study materials efficiently.

- Enterprise Data Integration: With RLAMA Pro, enterprises can connect RAG systems to data warehouses such as Snowflake to query their data.

- Custom AI Agent Creation: Users can build specialized AI agents with multiple roles and tools to perform complex document-related tasks.


RLAMA Alternatives

ALTAR

Smart workspace that organizes notes, images, and links into a structured knowledge base, helping users capture inspiration and turn ideas into action.

Solab.ai

An interactive knowledge-sharing platform combining community-driven Q&A with AI-assisted responses for collaborative learning.

Good Face Project

Cloud-based platform offering comprehensive formulation, regulatory compliance, and product lifecycle management for cosmetic innovators.

Refly

Flexible content creation platform featuring a free-form canvas, multi-threaded dialogues, and integrated knowledge management for seamless idea-to-content workflows.

LlamaIndex

A flexible framework for building enterprise knowledge assistants by connecting large language models to diverse data sources.

DocsBot AI

AI-powered chatbot platform delivering instant, accurate answers by training custom bots on your own documentation and content.

Wikiwand

A modernized, AI-enhanced interface for Wikipedia that improves readability, navigation, and knowledge consumption.

Grokipedia

AI-powered encyclopedia providing real-time knowledge synthesis and fact-verified articles with over 880,000 entries covering diverse topics from breaking news to technical subjects.
